Recently, someone asked on Reddit why their DynamoDB API calls were getting throttled at 1k WCU even though their table was set up in on-demand billing mode.

Let me try to explain this behavior, starting with how DynamoDB works under the covers.

Partition capacity

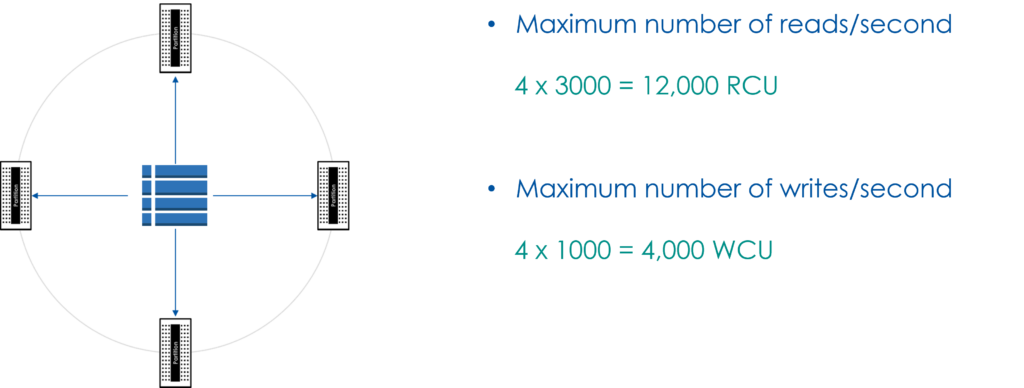

When you create a DynamoDB table, the service allocates a set of partitions to the table. The maximum I/O capacity available to the table is determined by the total number of partitions. Each partition is capped at:

- 10 GB storage

- 1,000 Write Capacity Units (WCU)

- 3,000 Read Capacity Units (RCU)

For example, a table with 4 partitions can serve at most 4 × 1,000 = 4,000 WCU and 4 × 3,000 = 12,000 RCU, and store up to 40 GB.
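The same per-partition arithmetic can be written as two small helpers: one turns a partition count into table-level ceilings, the other estimates a lower bound on the partitions a workload needs. This is an illustrative sketch under the simplified model in this post (traffic spread evenly, the 1,000 WCU / 3,000 RCU / 10 GB caps, burst capacity ignored); DynamoDB does not expose its real partition count, and `peak_capacity` and `min_partitions` are hypothetical names, not AWS APIs.

```python
import math

# Per-partition caps as described in this post (simplified model).
PARTITION_MAX_WCU = 1_000
PARTITION_MAX_RCU = 3_000
PARTITION_MAX_GB = 10


def peak_capacity(partitions: int) -> dict:
    """Sustained (non-burst) ceiling for a table with this many partitions,
    assuming traffic is spread evenly across them."""
    return {
        "wcu": partitions * PARTITION_MAX_WCU,
        "rcu": partitions * PARTITION_MAX_RCU,
        "storage_gb": partitions * PARTITION_MAX_GB,
    }


def min_partitions(wcu: int, rcu: int, storage_gb: float) -> int:
    """Lower bound on partitions so that no single per-partition cap is exceeded."""
    return max(
        1,
        math.ceil(wcu / PARTITION_MAX_WCU),
        math.ceil(rcu / PARTITION_MAX_RCU),
        math.ceil(storage_gb / PARTITION_MAX_GB),
    )


print(peak_capacity(4))                  # {'wcu': 4000, 'rcu': 12000, 'storage_gb': 40}
print(min_partitions(5_000, 6_000, 25))  # 5
```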

The peak capacity available to the table is determined by the number of partitions. For short bursts, a table can exceed this capacity for a finite amount of time, but a sustained load over a longer duration will exhaust the burst capacity available to the table. Now you may ask: why didn’t my on-demand table scale? Let’s dive a bit deeper.

I’m trying to insert a million records all with different partition key PK but the same sort key SK. I’m getting throttled at 1k WCU, which is the maximum write for a single partition, but my partition key is unique for every single record ….. …..

— User, Reddit

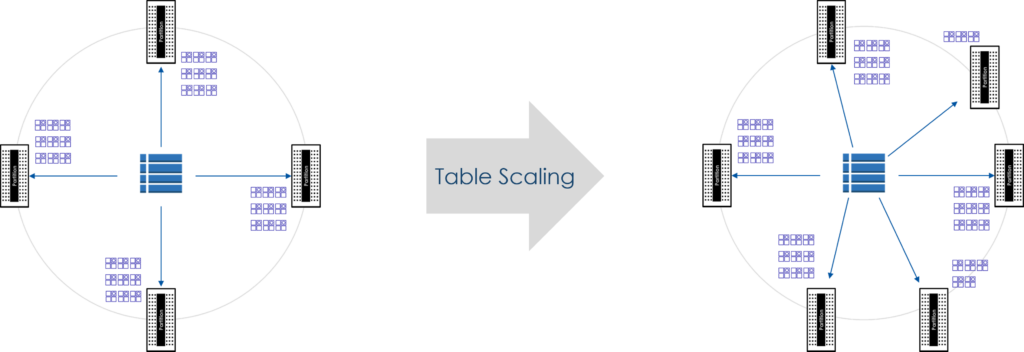

Table Scaling

DynamoDB continuously monitors your table’s usage and, as usage grows, adds partitions to the table. Under the covers, DynamoDB tracks your storage and I/O usage in CloudWatch, and this target-tracking mechanism initiates the auto scaling and re-partitioning behavior.

Home run

So how does this explain the observed behavior? The important thing to keep in mind is that the target-tracking mechanism does not scale the table instantaneously; it takes a finite amount of time. A large number of writes in a short duration does not give DynamoDB enough time to add partitions and re-partition the items. This can cause throttling even on an on-demand table!

Let’s say you created a new on-demand table. For this discussion, assume that:

- 10 partitions are allocated

- Each item is 1 KB in size

- You have 1 million items that you would like to load consecutively

- Each item has a unique partition key, so items are distributed uniformly across the 10 partitions

When you start the sequential writes of 1 million items, each partition receives ~100K writes (1M divided by 10). Each 1 KB write consumes 1 WCU and each partition is capped at 1,000 WCU, so even at full speed every partition needs at least 100 seconds to absorb its share; the writes are spread across many seconds rather than landing in the first one. This sudden burst of writes triggers scaling, but the write load is sustained and scaling has not had a chance to complete, hence the throttling.
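The arithmetic behind this scenario can be checked in a few lines of Python. This sketch assumes exactly the conditions listed above (10 partitions that never split during the load, 1 KB items, a perfectly even key distribution); `min_load_seconds` is a hypothetical helper, not a DynamoDB API.

```python
import math

PARTITION_MAX_WCU = 1_000  # per-partition write cap used throughout this post


def min_load_seconds(items: int, partitions: int, item_size_kb: float) -> float:
    """Fastest possible load time if writes are spread evenly across partitions
    and the partition count stays fixed for the whole load."""
    # A standard write consumes 1 WCU per 1 KB of item size, rounded up.
    wcu_per_item = math.ceil(item_size_kb)
    writes_per_partition = math.ceil(items / partitions)
    return writes_per_partition * wcu_per_item / PARTITION_MAX_WCU


print(min_load_seconds(1_000_000, 10, 1))  # 100.0
```

If your loader can push writes faster than the table’s 10 × 1,000 = 10,000 WCU ceiling, it stays pinned at that ceiling for at least ~100 seconds, which is a sustained load rather than a short burst.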

Remedy

What we have seen here is that bulk loading data can lead to throttling even if the table is set up with the on-demand billing mode. There are a couple of ways to bulk load data:

- Import the data from S3 (DynamoDB import from S3)

- Use Amazon EMR, AWS Glue, or AWS DMS
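If your data is already in S3, the import can be started with boto3’s `import_table` call. This is a minimal sketch: the bucket, prefix, table name, and the `PK`/`SK` string key schema are placeholders, and the client is passed in so the function can run without AWS credentials. Keep in mind that the import always creates a new table, and according to the AWS documentation it does not consume the new table’s write capacity.

```python
def start_s3_import(client, bucket: str, prefix: str, table_name: str) -> str:
    """Create a new on-demand table populated from S3; returns the import ARN.

    `client` is expected to be a boto3 DynamoDB client (boto3.client("dynamodb")).
    Bucket, prefix, table name and key schema below are placeholders.
    """
    response = client.import_table(
        S3BucketSource={"S3Bucket": bucket, "S3KeyPrefix": prefix},
        InputFormat="DYNAMODB_JSON",   # "CSV" and "ION" are also accepted
        InputCompressionType="GZIP",   # or "ZSTD" / "NONE"
        TableCreationParameters={
            "TableName": table_name,
            "AttributeDefinitions": [
                {"AttributeName": "PK", "AttributeType": "S"},
                {"AttributeName": "SK", "AttributeType": "S"},
            ],
            "KeySchema": [
                {"AttributeName": "PK", "KeyType": "HASH"},
                {"AttributeName": "SK", "KeyType": "RANGE"},
            ],
            "BillingMode": "PAY_PER_REQUEST",
        },
    )
    return response["ImportTableDescription"]["ImportArn"]
```

In real use you would pass `boto3.client("dynamodb")` and poll `describe_import` with the returned ARN until the import completes.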

Disclaimer

I have not tried these exact suggestions myself; they are based on my general experience with DynamoDB. I highly recommend that you test them before committing to any of them.

What if I want to write my own bulk loading logic?

In that case, just slow down your write calls 🙂 and use BatchWriteItem instead of PutItem. Better yet, gradually ramp up the rate of BatchWriteItem requests instead of just waiting/sleeping for a fixed time between batches. This gives DynamoDB enough time to scale up your table.
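Here is a minimal sketch of the ramp-up idea using boto3’s `batch_write_item`. Assumptions: items are already in DynamoDB’s attribute-value format, the starting rate, ramp factor, and ceiling are arbitrary placeholders to tune for your own table, and `ramped_bulk_load` is a hypothetical helper name. It starts with a low number of batches per second, raises that rate every few batches, and retries any `UnprocessedItems` with exponential backoff.

```python
import time
from typing import Iterator, List


def chunks(items: List[dict], size: int) -> Iterator[List[dict]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def ramped_bulk_load(client, table_name: str, items: List[dict],
                     start_rate: float = 1.0, max_rate: float = 40.0,
                     ramp_factor: float = 1.5, ramp_every: int = 10,
                     sleep=time.sleep) -> int:
    """Write `items` with BatchWriteItem, gradually increasing batches per second.

    `items` must already be in attribute-value format, e.g.
    {"PK": {"S": "id#1"}, "SK": {"S": "meta"}}. Returns the number of batches sent.
    """
    rate = start_rate  # current pace, in batches per second
    batches_sent = 0
    for batch in chunks(items, 25):  # BatchWriteItem takes at most 25 put/delete requests
        pending = {table_name: [{"PutRequest": {"Item": item}} for item in batch]}
        backoff = 0.05
        while pending:
            response = client.batch_write_item(RequestItems=pending)
            # Items DynamoDB could not write (e.g. throttled) come back here.
            pending = response.get("UnprocessedItems") or {}
            if pending:
                sleep(backoff)
                backoff = min(backoff * 2, 5.0)  # exponential backoff, capped at 5 s
        batches_sent += 1
        # Ramp up: every `ramp_every` batches, raise the rate (up to max_rate).
        if batches_sent % ramp_every == 0:
            rate = min(rate * ramp_factor, max_rate)
        sleep(1.0 / rate)  # pace the next batch at the current rate
    return batches_sent


# Usage with a real client (requires boto3 and AWS credentials):
#   import boto3
#   ramped_bulk_load(boto3.client("dynamodb"), "my-table", items)
```

The chunk size of 25 matches the BatchWriteItem per-call limit. If every item in a batch is throttled, DynamoDB raises `ProvisionedThroughputExceededException` instead of returning `UnprocessedItems`; this sketch leaves that case to botocore’s built-in retries. The `sleep` parameter is injectable so the pacing can be tested without actually waiting.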

What if I don’t want this complex ramp-up logic?

You may be able to achieve the same result by creating the table in provisioned mode and then switching it to on-demand:

- Set up the table with provisioned capacity high enough to accommodate your bulk-write load

- Under the covers, DynamoDB will allocate enough partitions to serve your peak writes

- Do your bulk load right away, as you don’t want to pay for the high provisioned capacity for long

- Once the load is done, switch the table to on-demand
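The steps above might look like this with boto3. The table name, the `PK`/`SK` key schema, the placeholder read capacity of 5 RCU, and the `load` callback are assumptions for illustration, and `load_with_provisioned_then_on_demand` is a hypothetical helper; size `peak_wcu` to your own bulk-write rate. AWS also limits how often a table can switch billing modes, so check the current quota before relying on the switch.

```python
def load_with_provisioned_then_on_demand(client, table_name: str, peak_wcu: int, load):
    """Create a provisioned table sized for the bulk load, run `load`, then switch
    the table to on-demand. `client` is expected to be boto3.client("dynamodb")."""
    # 1. Create the table in provisioned mode with enough WCU for the peak load.
    client.create_table(
        TableName=table_name,
        AttributeDefinitions=[
            {"AttributeName": "PK", "AttributeType": "S"},
            {"AttributeName": "SK", "AttributeType": "S"},
        ],
        KeySchema=[
            {"AttributeName": "PK", "KeyType": "HASH"},
            {"AttributeName": "SK", "KeyType": "RANGE"},
        ],
        BillingMode="PROVISIONED",
        ProvisionedThroughput={"ReadCapacityUnits": 5, "WriteCapacityUnits": peak_wcu},
    )
    # Wait until the table is ACTIVE before writing to it.
    client.get_waiter("table_exists").wait(TableName=table_name)
    # 2. Run the bulk load right away (caller-supplied function).
    load()
    # 3. Once the load is done, switch the table to on-demand.
    client.update_table(TableName=table_name, BillingMode="PAY_PER_REQUEST")
```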
